11 September 2023

By Pascal LIBENZI.

It is common to hear the term "service Mesh" with the emergence of container orchestration, particularly with a microservices architecture.

As a reminder, the purpose of a service Mesh, as mentioned in our previous article about Cloud Native Applications, is to remove as much technical complexity from your microservices as possible, delegate most of the technical functionalities to the service Mesh, and avoid pitfalls that may arise from multiple implementations of the same standard functionality in all microservices.

These discussions lead to the question of which service Mesh is the best and why we would choose one over another. To make these discussions clearer and to help you in this choice, it is important to understand and create a list of what is expected from a service Mesh.

Indeed, choosing a service Mesh can sometimes be quite complicated, as all competitors in this field offer features that seem very appealing when operating Kubernetes clusters on our infrastructure.

Yet it is a crucial step in building your platform, and we therefore advise you to take the time to analyze the available solutions in depth before making a decision.

The purpose of this article is to serve as a guide for such a choice. We describe what can be expected from a service Mesh, so that you can compare the products available on the market.

A service Mesh is an infrastructure layer dedicated to managing communication between microservices within a distributed architecture. It operates at a low level of the infrastructure and can act transparently at network layers 4 and/or 7 (L4 = transport layer, for example TCP; L7 = application layer, for example HTTP).

The service Mesh provides features such as communication security, traffic management, observability, service discovery, and fault tolerance.

We will deep-dive into the essential points to compare after reviewing the architecture of a service Mesh.

While a service Mesh can be used with various infrastructures, in this article, we will focus on its use within Kubernetes clusters as it is the most common use case.

As mentioned in the service Mesh definition, the architecture of a service Mesh can vary from one implementation to another. Let’s explore the architectures on which service Meshes are based.

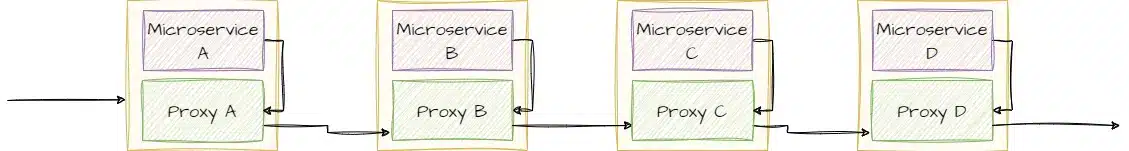

With this architecture, each microservice is accompanied by a sidecar proxy, deployed as a separate container alongside the microservice within the same pod. The proxy is responsible for managing network traffic between microservices.

When microservice A wants to communicate with microservice B, the traffic goes through the proxy, which intercepts the request and takes over the network-related tasks (routing, encryption, etc.). It then forwards the request to the sidecar proxy of microservice B, which also performs necessary operations, such as decrypting the message, and subsequently transmits the request to microservice B.
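To make this request path more concrete, here is a minimal Python sketch of two sidecar proxies relaying a call from microservice A to microservice B. Everything in it is an assumption made for illustration: the `SidecarProxy` class, the shared `registry` dictionary used as a toy service lookup, and the `toy_encrypt`/`toy_decrypt` helpers, which use base64 only as a stand-in for real encryption and offer no security.

```python
import base64
from dataclasses import dataclass, field
from typing import Callable, Dict, List


def toy_encrypt(payload: str) -> str:
    # Stand-in for real encryption such as mTLS; base64 is NOT secure.
    return base64.b64encode(payload.encode()).decode()


def toy_decrypt(ciphertext: str) -> str:
    return base64.b64decode(ciphertext.encode()).decode()


@dataclass
class SidecarProxy:
    """Hypothetical sidecar proxy that fronts a single microservice."""
    service_name: str
    app: Callable[[str], str]             # request handler of the microservice
    registry: Dict[str, "SidecarProxy"]   # toy lookup: service name -> proxy
    log: List[str] = field(default_factory=list)

    def __post_init__(self) -> None:
        self.registry[self.service_name] = self

    def send(self, destination: str, request: str) -> str:
        """Outbound path: intercept, route, encrypt, forward to the peer proxy."""
        peer = self.registry[destination]
        self.log.append(f"outbound {self.service_name} -> {destination}")
        encrypted_reply = peer.receive(self.service_name, toy_encrypt(request))
        return toy_decrypt(encrypted_reply)

    def receive(self, source: str, ciphertext: str) -> str:
        """Inbound path: decrypt, then hand the request to the local microservice."""
        self.log.append(f"inbound {source} -> {self.service_name}")
        reply = self.app(toy_decrypt(ciphertext))
        return toy_encrypt(reply)


registry: Dict[str, SidecarProxy] = {}
proxy_a = SidecarProxy("service-a", lambda req: f"A handled {req}", registry)
proxy_b = SidecarProxy("service-b", lambda req: f"B handled {req}", registry)

# Microservice A never talks to B directly: its sidecar does.
reply = proxy_a.send("service-b", "GET /orders")
print(reply)  # B handled GET /orders
```

Note that neither handler contains any routing or encryption logic; in this sketch those concerns live entirely in the proxies.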

This data plane architecture allows fine-grained traffic management and advanced features at each sidecar proxy level. Each sidecar proxy can collect metrics, perform security checks, and more. The collection of all sidecar proxies constitutes the "data plane", which ensures communication between microservices.

The drawback of this type of architecture (which is not widely used anymore) is that the configuration management is done within each sidecar, making it challenging to maintain consistent configuration across the entire cluster.

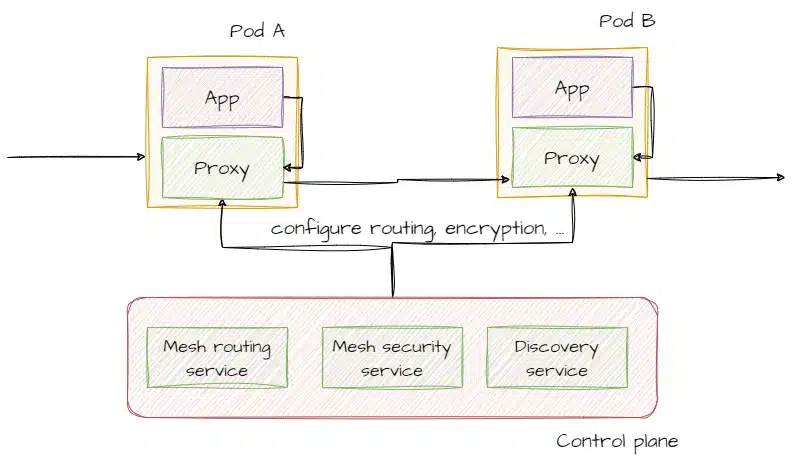

With this architecture, a control plane is responsible for configuring and coordinating the deployed sidecar proxies with the microservices.

The control plane consists of one or more components that work together to manage network policies and sidecar proxy configuration. It centralizes information about the microservices and transmits it to the proxies so they can apply the rules that are defined at the service Mesh level.

This architecture enables centralized and consistent management of communication policies between microservices. It facilitates the implementation of network policies throughout the cluster.
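The following Python sketch illustrates the idea of a control plane pushing one consistent configuration to every proxy. It is a simplified model under our own assumptions, not the protocol of any real service Mesh: the `ControlPlane` and `Proxy` classes, the versioning scheme, and the rule keys (`mtls`, `timeout`) are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Proxy:
    """Hypothetical sidecar proxy that only stores the configuration it receives."""
    workload: str
    config_version: int = 0
    rules: Dict[str, str] = field(default_factory=dict)

    def apply(self, version: int, rules: Dict[str, str]) -> None:
        self.config_version = version
        self.rules = dict(rules)  # copy so proxies do not share mutable state


@dataclass
class ControlPlane:
    """Hypothetical control plane: single source of truth for mesh-wide rules."""
    version: int = 0
    rules: Dict[str, str] = field(default_factory=dict)
    proxies: List[Proxy] = field(default_factory=list)

    def register(self, proxy: Proxy) -> None:
        self.proxies.append(proxy)
        proxy.apply(self.version, self.rules)  # new proxies start in sync

    def set_rule(self, key: str, value: str) -> None:
        self.rules[key] = value
        self.version += 1
        for proxy in self.proxies:  # push the change to every registered proxy
            proxy.apply(self.version, self.rules)

    def in_sync(self) -> bool:
        return all(
            p.config_version == self.version and p.rules == self.rules
            for p in self.proxies
        )


control_plane = ControlPlane()
for name in ("service-a", "service-b", "service-c"):
    control_plane.register(Proxy(name))

control_plane.set_rule("mtls", "strict")
control_plane.set_rule("timeout", "2s")
print(control_plane.in_sync())  # True
```

The rules are defined once, at the control plane level, and each proxy simply applies what it is sent. This is what makes consistency across the cluster easier to guarantee than with per-sidecar configuration.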

These two architectures are not mutually exclusive; on the contrary, they complement each other. The data plane architecture controls the traffic between microservices, while the control plane architecture provides centralized management of policies and configurations for the sidecar proxies. The control plane architecture builds upon the data plane architecture by adding a layer of configuration centralization, making it easier to ensure consistency in the features offered by the sidecar proxies.

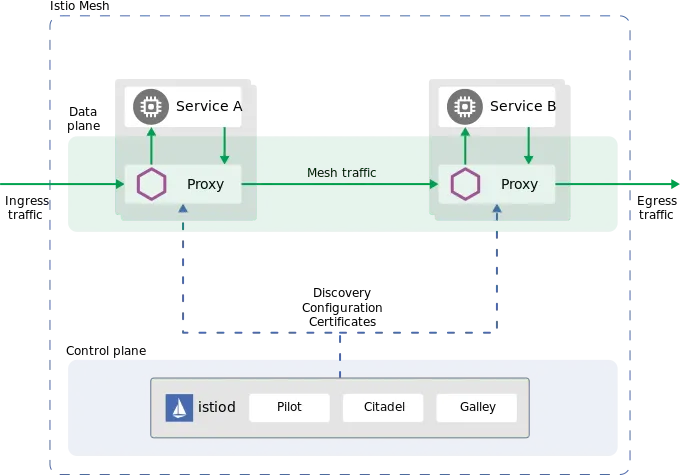

Most service Meshes adopt a Control Plane + Data Plane approach (early versions of Istio, for instance, provided components like Pilot, Mixer, Citadel, and Galley at the control plane level, whereas recent versions ship the control plane as a single component, istiod), which can be described as follows:

For Istio, the architecture is described by the following diagram:

With the emergence of the service Mesh in Kubernetes clusters, the use of sidecar proxies has become a subject of concern. While the workload is only minimally impacted in terms of performance and resource usage, the cost of deploying numerous proxies, even if lightweight, can become significant in large Kubernetes clusters with thousands of pods.
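A quick back-of-the-envelope calculation shows how a small per-proxy footprint adds up. The figures below (5,000 pods, 50 millicores and 64 MiB reserved per proxy) are purely hypothetical inputs chosen for illustration, not measurements of any real proxy; the `sidecar_overhead` helper is ours.

```python
from typing import Tuple


def sidecar_overhead(
    pods: int,
    cpu_millicores_per_proxy: int,
    memory_mib_per_proxy: int,
) -> Tuple[float, float]:
    """Return the total (CPU cores, memory in GiB) reserved with one proxy per pod."""
    total_cpu_cores = pods * cpu_millicores_per_proxy / 1000
    total_memory_gib = pods * memory_mib_per_proxy / 1024
    return total_cpu_cores, total_memory_gib


# Hypothetical figures, to be replaced with values measured on your own cluster.
cpu_cores, memory_gib = sidecar_overhead(
    pods=5000,
    cpu_millicores_per_proxy=50,
    memory_mib_per_proxy=64,
)
print(f"{cpu_cores} CPU cores, {memory_gib} GiB of memory")
# 250.0 CPU cores, 312.5 GiB of memory
```

Plugging in the resource requests actually observed for your proxies gives a first estimate of what the sidecar model costs at your scale.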

Moreover, making changes to network routing rules, for instance, requires modifying the sidecar proxy, which in turn requires restarting the pod. Although this may not be a critical issue in itself, imagine a change that impacts all pods in a cluster: every pod has to be restarted to apply the new network configuration, which is hardly an enjoyable operation.

Hence the idea of delegating these functionalities to a lower level, for example by instrumenting the Linux kernel via eBPF, as Cilium does, or by using network tunnels that are transparent to the microservice and route its traffic through the service Mesh.

Another challenge induced by proxies is that, regardless of the specific functionality you want to utilize, the sidecar proxy carries all the dependencies necessary for all features of the service Mesh. In other words, if you only want to use the network encryption feature, the proxy will still include all other dependencies related to routing, observability (metric export), and other features that may not be immediately needed. This increases the attack surface of the pod (even if you are confident in your chosen service Mesh implementation, using unnecessary components will increase the number of required security updates over time).

The new model without sidecar, regardless of its implementation, aims to achieve the following goals:

In the case of the Ambient implementation of Istio, this is achieved by:

We will revisit the features covered in our article on what a Cloud Native Application is, where we had already mentioned service Meshes as a way to help us in a microservices architecture:

In a zero-trust architecture approach, encrypting inter-service communications is a fundamental functionality of a service Mesh. It is essential that mutual Transport Layer Security (mTLS) encryption between pods is easy to configure and operates transparently, without requiring complex configuration. Additionally, the ability to relax encryption for applications that do not yet support TLS is crucial. This covers specific cases, such as application migrations, where encryption may not be immediately feasible. If a permissive mTLS mode is available, it should be used only temporarily and limited to migration phases.
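The difference between a strict and a permissive mode can be sketched in a few lines of Python. This is a simplified model under our own assumptions: the `MtlsMode` enum, the mesh-wide default, and the `legacy-billing` workload override are hypothetical and do not correspond to the configuration format of any particular service Mesh.

```python
from enum import Enum
from typing import Dict


class MtlsMode(Enum):
    STRICT = "strict"          # only mutually authenticated TLS is accepted
    PERMISSIVE = "permissive"  # mTLS and plaintext are both accepted (migration only)


# Hypothetical configuration: strict everywhere, with one temporary exception.
MESH_DEFAULT = MtlsMode.STRICT
WORKLOAD_OVERRIDES: Dict[str, MtlsMode] = {
    "legacy-billing": MtlsMode.PERMISSIVE,  # to be removed once migrated
}


def effective_mode(workload: str) -> MtlsMode:
    """A workload-level override wins over the mesh-wide default."""
    return WORKLOAD_OVERRIDES.get(workload, MESH_DEFAULT)


def accepts_connection(workload: str, client_uses_mtls: bool) -> bool:
    """Decide whether an inbound connection to the workload is accepted."""
    if effective_mode(workload) is MtlsMode.STRICT:
        return client_uses_mtls
    return True  # permissive: plaintext clients are tolerated


print(accepts_connection("orders", client_uses_mtls=False))          # False
print(accepts_connection("legacy-billing", client_uses_mtls=False))  # True
```

Keeping the exceptions in an explicit, short list makes it easier to check that permissive mode stays limited to workloads that are actually being migrated.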

Service Mesh routing rules should enable the implementation of advanced security policies between different Kubernetes components. It is essential to have fine granularity in defining these security policies (the "least privilege principle") to precisely control communications between services. For example, it might be necessary to restrict communication between specific services or define security policies at the namespace level. A generic configuration capability in the service Mesh offers additional flexibility to apply security policies consistently and at scale.
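As an illustration of least privilege, the sketch below evaluates a small set of allow rules with a default-deny stance, at both the namespace and the service level. The `AllowRule` structure, the `is_allowed` function, and the namespace and service names are hypothetical; real service Meshes express such policies in their own resource formats.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class AllowRule:
    """Hypothetical rule: who may call a given destination service."""
    source_namespace: str
    destination_service: str
    source_service: Optional[str] = None  # None = any service in the namespace


def is_allowed(
    rules: List[AllowRule],
    source_namespace: str,
    source_service: str,
    destination_service: str,
) -> bool:
    """Default deny: a call is allowed only if at least one rule matches it."""
    for rule in rules:
        if rule.destination_service != destination_service:
            continue
        if rule.source_namespace != source_namespace:
            continue
        if rule.source_service not in (None, source_service):
            continue
        return True
    return False


rules = [
    # Service-level rule: only "cart" in "shop" may call "payment".
    AllowRule("shop", "payment", source_service="cart"),
    # Namespace-level rule: anything in "monitoring" may call "metrics".
    AllowRule("monitoring", "metrics"),
]

print(is_allowed(rules, "shop", "cart", "payment"))      # True
print(is_allowed(rules, "shop", "frontend", "payment"))  # False
```

Because nothing is allowed unless a rule says so, adding a new service to the cluster does not silently widen what it can reach.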

The ability to observe and understand communications between services is crucial for the proper functioning of a system based on a service Mesh. Easy identification of communication flows between different services and rapid detection of potential errors or malfunctions are essential. Real-time graphical visualization of communications is a valuable asset that facilitates system operations and troubleshooting. It allows for an intuitive view of data flows, identification of bottlenecks, and monitoring of overall network performance. Tools like Kiali, easily integrated with Istio, or Hubble for Cilium provide such visualization.
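Conceptually, such a visualization is built from per-request records aggregated into a graph of service-to-service edges. The Python sketch below shows that aggregation step only; the record format (source, destination, HTTP status) and the `build_service_graph` function are assumptions for illustration and are not how Kiali or Hubble are implemented.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

Record = Tuple[str, str, int]  # (source service, destination service, HTTP status)
Edge = Tuple[str, str]


def build_service_graph(records: Iterable[Record]) -> Dict[Edge, Dict[str, float]]:
    """Aggregate request records into per-edge request counts and error rates."""
    counters: Dict[Edge, Dict[str, int]] = defaultdict(
        lambda: {"requests": 0, "errors": 0}
    )
    for source, destination, status in records:
        edge = counters[(source, destination)]
        edge["requests"] += 1
        if status >= 500:  # treat 5xx responses as errors
            edge["errors"] += 1
    return {
        edge: {**c, "error_rate": c["errors"] / c["requests"]}
        for edge, c in counters.items()
    }


# Hypothetical records, as a proxy might report them.
records = [
    ("frontend", "cart", 200),
    ("frontend", "cart", 503),
    ("cart", "payment", 200),
    ("cart", "payment", 200),
]
graph = build_service_graph(records)
for (source, destination), stats in graph.items():
    print(f"{source} -> {destination}: {stats}")
```

An edge with a high error rate, such as `frontend -> cart` in this sample, is exactly what a graphical view lets operators spot at a glance.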

Adding an authentication layer between microservices enhances the overall security of the system. It ensures that only authorized services can communicate with each other, by verifying the identity and legitimacy of the parties involved. Authentication becomes particularly important when some components do not support recent authentication mechanisms. A service Mesh makes it easy to add this authentication layer, reinforcing the overall security of the application architecture.

Service Meshes offer advanced deployment functionalities, including "Canary" and "Blue-Green" deployments. These deployment modes enable more sophisticated strategies than the basic Kubernetes rolling update. A "Canary" deployment exposes a new version of an application to a portion of the traffic, while a "Blue-Green" deployment switches all traffic to a new version of the application before the old version is removed. These deployment modes offer better control over updates, minimizing service interruptions and the risks associated with software updates. Note that service Meshes can handle other deployment strategies as well, such as Recreate, Rolling, A/B testing, Mirroring (traffic shadowing), and more.
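Both strategies boil down to weighted routing between versions, which the Python sketch below simulates. The `pick_version` helper, the version names, and the 90/10 split are hypothetical; a real service Mesh applies such weights in its proxies through its own routing configuration.

```python
import random
from collections import Counter
from typing import Dict


def pick_version(weights: Dict[str, int], rng: random.Random) -> str:
    """Pick the version that serves one request, proportionally to its weight."""
    versions = list(weights)
    return rng.choices(versions, weights=[weights[v] for v in versions], k=1)[0]


# Canary: the new version receives a small share of the traffic.
canary_weights = {"v1": 90, "v2": 10}
# Blue-Green after the switch: all traffic goes to the new version.
blue_green_weights = {"v1": 0, "v2": 100}

rng = random.Random(42)  # fixed seed so the simulation is repeatable
canary_counts = Counter(pick_version(canary_weights, rng) for _ in range(10_000))
blue_green_counts = Counter(pick_version(blue_green_weights, rng) for _ in range(1_000))

print(canary_counts)      # roughly 90% v1, 10% v2
print(blue_green_counts)  # v2 only
```

Promoting a canary then amounts to shifting the weights step by step toward the new version, while watching error rates, without redeploying anything.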

It is essential to choose a service Mesh solution that has proven itself and is used in production by many users. A mature solution typically offers increased stability and reliability, as well as an active community ready to help with difficulties. Relying on an established community provides access to advice, best practices, and proven problem resolutions.

When dealing with a large number of workloads or microservices, adding a sidecar proxy for each service can drive up both cost and environmental impact. The ability to operate without sidecar containers offers significant advantages, reducing complexity and resource requirements while optimizing performance and efficiency.

Integrating an ingress controller or gateway functionality within the service Mesh offers practical benefits. It eliminates the need to add an extra component to manage cluster entry points while providing better observability. Having a centralized view of access from outside the cluster makes monitoring and tracing communications easier, leading to improved security management and overall visibility.

Installing a service Mesh should not be a burdensome task, especially for basic features like communication encryption. The chosen solution should offer a simplified installation experience with clear and well-documented processes. Minimizing installation and configuration time allows for faster adoption and enables teams to move on to other critical tasks.

A service Mesh should be easy to operate without being an obstacle to your time-to-market. The operations team should be able to understand cluster activities easily and perform management, monitoring, and troubleshooting tasks with minimal difficulty. A user-friendly interface, intuitive management tools, and clear documentation contribute to ease of use.

If you use multiple clusters and need to establish secure communications between them, the ability to set up a multi-cluster service Mesh (Cluster Mesh) is an essential feature. This ensures consistency and security of communications across different clusters, eliminating the need to set up specific solutions for each cluster. A multi-cluster implementation simplifies global network management and ensures reliable and secure connectivity between environments.

Comprehensive and clear documentation is a critical factor when choosing a service Mesh. Good documentation should provide concrete examples, detailed installation guides, and practical demonstrations. Quality documentation demonstrates the maturity of the solution and facilitates adoption by helping users quickly understand key concepts, features, and best practices. Precise documentation makes it easier to resolve issues, configure, and make the most of the chosen service Mesh.

A frequently updated, high-level comparison of different service Meshes is available at servicemesh.es.

Please note that in our series, where we will delve deeper into service Meshes, their installation, and functionalities, we will not review all service Meshes, but only the most mature ones: Istio, Cilium, Linkerd and Kuma.

In conclusion, the microservices architecture presents numerous challenges in terms of manageability and securing workloads. Service Meshes are tools that facilitate the implementation of distributed architectures on our infrastructure, while adhering to the DRY (Don’t Repeat Yourself) and KISS (Keep It Simple, Stupid) principles.

To choose the right implementation suitable for your use cases, it is essential to define your priorities among the features offered by different service Meshes and evaluate your specific needs. Manageability and ease of use should be key criteria in your choice, as these tools are designed to simplify the implementation of your platform, and mitigate the challenges of microservices architecture.
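One simple way to turn priorities into a comparison is a weighted scoring matrix, sketched below in Python. The criteria, the weights, the 0 to 5 scores, and the candidate names `mesh-x` and `mesh-y` are all placeholders to be replaced with your own evaluation; they do not rate any real product.

```python
from typing import Dict


def weighted_score(weights: Dict[str, int], scores: Dict[str, int]) -> float:
    """Weighted average of the scores; criteria missing from `scores` count as 0."""
    total_weight = sum(weights.values())
    return sum(weights[c] * scores.get(c, 0) for c in weights) / total_weight


# Hypothetical priorities: the higher the weight, the more the criterion matters.
weights = {"mtls": 5, "observability": 3, "sidecarless": 2}

# Hypothetical candidates scored from 0 to 5 on each criterion.
candidates = {
    "mesh-x": {"mtls": 4, "observability": 5, "sidecarless": 1},
    "mesh-y": {"mtls": 4, "observability": 3, "sidecarless": 5},
}

ranking = sorted(
    candidates,
    key=lambda name: weighted_score(weights, candidates[name]),
    reverse=True,
)
for name in ranking:
    print(name, round(weighted_score(weights, candidates[name]), 2))
```

The value of the exercise lies less in the final number than in forcing the team to agree on the weights before looking at the products.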

It is important to note that this article covered the general concepts of service Meshes and mentioned some popular solutions such as Istio, Cilium, Linkerd and Kuma. In our in-depth series on service Meshes, we will explore these mature solutions in detail.

Ultimately, by understanding your requirements and taking the time to analyze the different options, you will be able to choose the service Mesh that best fits your needs and create a robust, secure, and easy-to-manage microservices architecture.